修改scrapytutorial目录settings.py文件

USER_AGENT = ‘Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36’

这里以抓取博客园文章为例

在spiders目录添加blog_spider.py文件1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19import scrapy

from scrapy.selector import Selector

from scrapy.http import HtmlResponse

class BlogSpider(scrapy.Spider):

name = 'blog'

start_urls = {

'https://www.cnblogs.com/chenying99/'

}

def parse(self, response):

container = response.css('#main')[0]

posts = container.css('div.post')

for article in posts:

title = article.css('a.postTitle2::text').extract_first().strip()

link = article.css('a.postTitle2::attr(href)').extract_first()

yield {'标题': title, '链接': link}

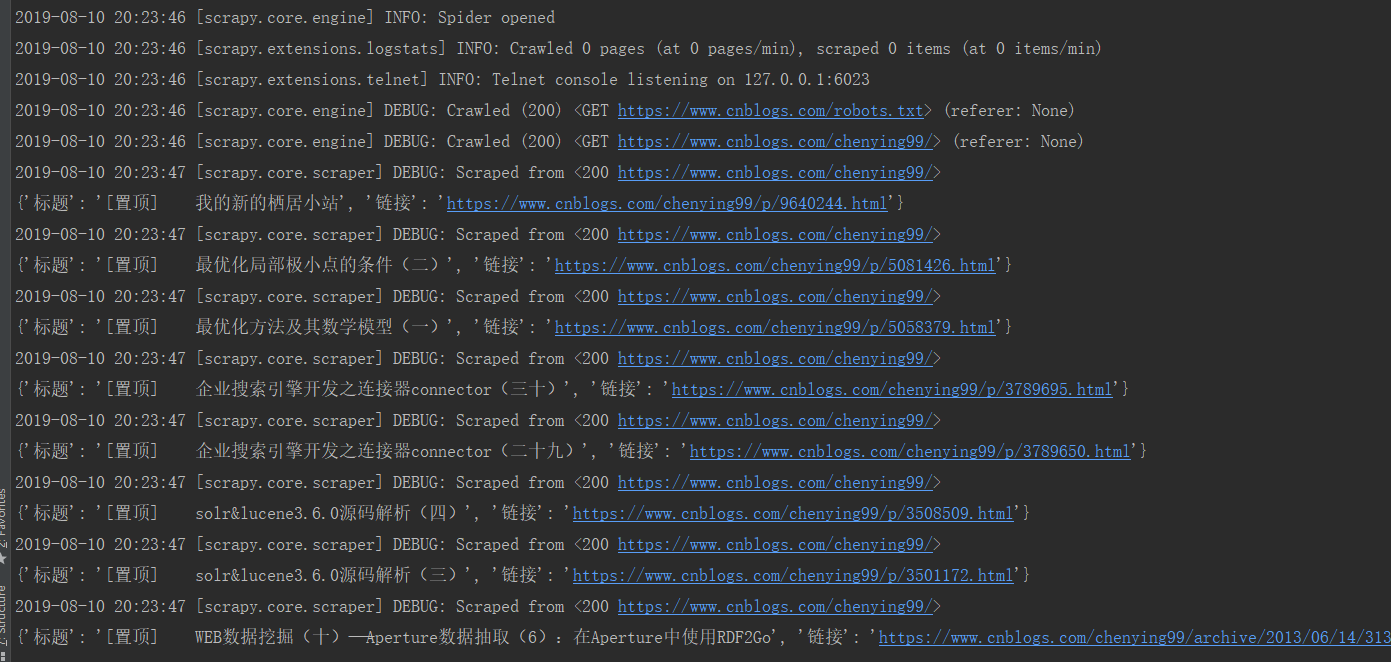

运行scrapy crawl blog

补充:获取html标签的css选择器,SelectorGadget plugin